Mastering dbt Testing for Your Cloud Database (Best Practices)

When it comes to maintaining data integrity and accuracy in cloud data warehouses like Amazon Redshift, Google BigQuery, or Snowflake, dbt (data build tool) has become a widely adopted solution among data teams. This guide explains why dbt testing matters in data engineering, walks through the main types of dbt tests, and offers strategies for incorporating them into your data workflow.

Why is dbt Testing Essential in Cloud Data Warehousing?

dbt lets data professionals run transformations directly inside the data warehouse, taking advantage of the compute and SQL capabilities of modern cloud platforms like Snowflake, BigQuery, and Redshift. Because the transformed tables feed analysis directly, testing is essential to data quality and consistency in these warehouses. Without proper testing, issues such as invalid data types, missing values, or broken relationships between tables can compromise the reliability of data analysis and lead to erroneous business decisions.

The Business Value of dbt Testing

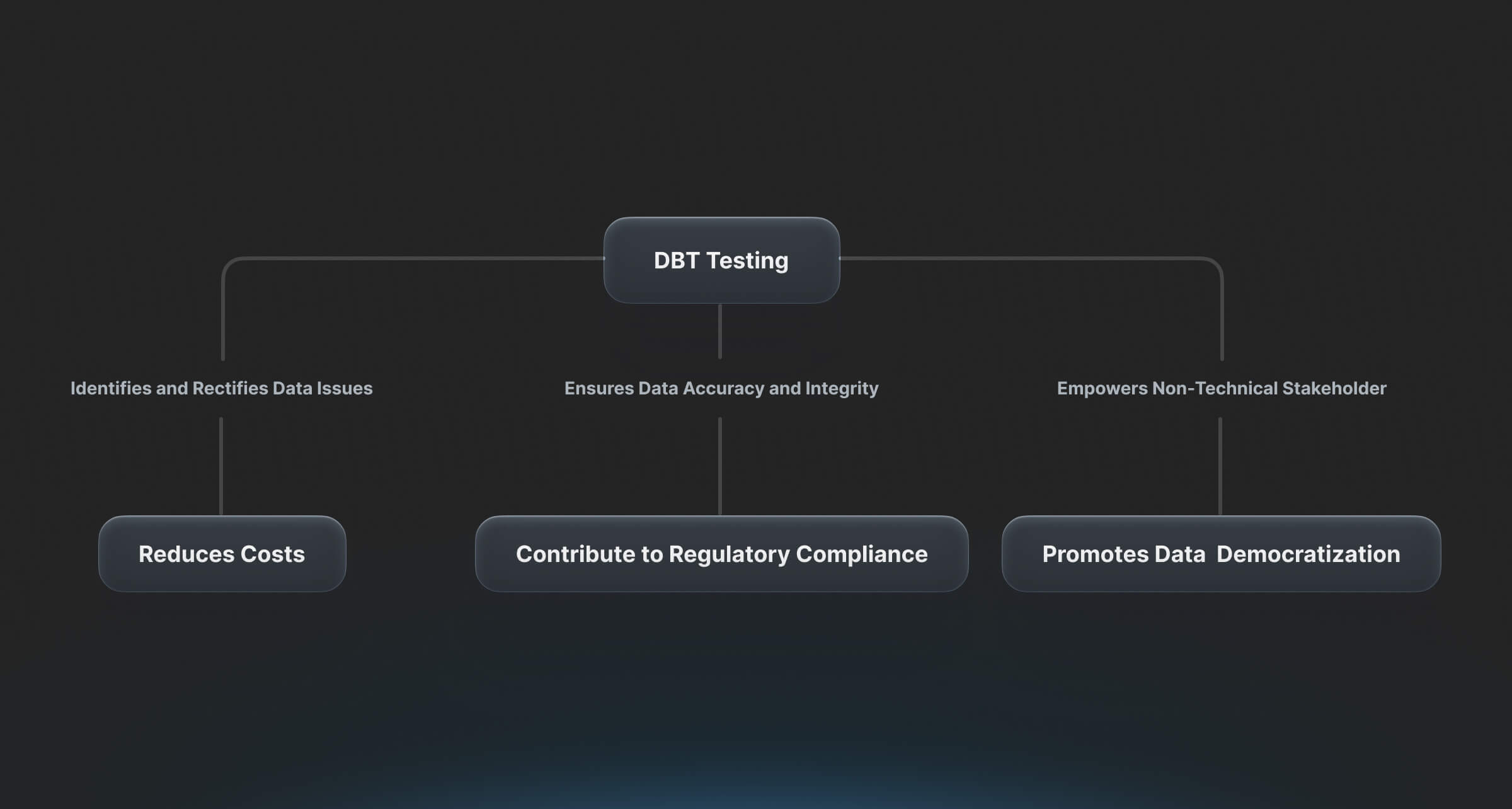

Beyond the technical aspects, dbt testing brings significant business value. Accurate, reliable data gives organizations the confidence to make data-driven decisions that support growth and profitability. dbt testing also reduces costs by catching and fixing data issues early, which lowers the risk of financial losses and operational inefficiencies.

Additionally, dbt testing contributes to regulatory compliance, enabling organizations to meet data accuracy and integrity requirements. It also promotes data democratization by empowering non-technical stakeholders to confidently access and utilize data.

One case study that highlights the business value of dbt testing is Condé Nast. By leveraging dbt, Condé Nast was able to create an evergreen platform that allowed its teams around the world to work from the same data sets. This resulted in higher productivity and greater trust in their data across the global organization. With dbt testing, 100% of their models were shipped with tests, supporting data accuracy and reliability.

Another case study that showcases the benefits of dbt testing is the Nutrafol case study. Nutrafol's data team was able to save over 100 hours per month by using automated dbt testing. This allowed them to efficiently review dbt code changes and ensure the quality and integrity of their data.

These case studies demonstrate how dbt testing brings significant business value by enabling organizations to make data-driven decisions, reducing costs by identifying and rectifying data issues early on, ensuring regulatory compliance, and promoting data democratization. By leveraging dbt testing, organizations can gain the confidence to drive business growth and profitability.

PopSQL, with its dbt Core integration, is poised to make a significant difference to businesses implementing dbt testing. Here's how:

- Enhanced SQL Editor: PopSQL provides a SQL editor with built-in dbt Core support, offering a seamless and intuitive environment for writing and executing SQL queries. This feature streamlines the process of working with dbt and allows users to leverage the power of dbt directly within the SQL editor.

- Macros and Models Creation: With PopSQL's dbt Core integration, users can create macros and models within the SQL editor. Macros are reusable pieces of SQL code that can be used to eliminate repetitive SQL, making query development more efficient. Models, on the other hand, simplify BI queries by abstracting complex SQL logic into easily understandable and reusable components.

- Data Catalog: PopSQL enables the creation of a data catalog backed by dbt Core. This feature helps businesses organize and document their data assets, making it easier for users to discover and understand available data resources. A well-structured data catalog enhances data accessibility and promotes data democratization within the organization.

- Collaboration and Sharing: PopSQL offers collaboration features that facilitate teamwork and knowledge sharing. Business users can easily analyze data without needing technical developers, thanks to PopSQL's crisp and clean UI editor. This promotes cross-functional collaboration and empowers non-technical stakeholders to access and utilize data effectively.

Overall, PopSQL's dbt Core integration provides businesses with a comprehensive solution for implementing dbt testing. It enhances the SQL editing experience, enables the creation of macros and models, simplifies BI queries, facilitates the creation of a data catalog, and promotes collaboration and knowledge sharing. By leveraging PopSQL, businesses can maximize the benefits of dbt testing and drive data-driven decision-making processes.

Getting Started with dbt Tests: Schema Tests and Bespoke Data Tests

dbt provides four built-in schema tests (called generic tests in current dbt documentation): Unique (`unique`), Not Null (`not_null`), Accepted Values (`accepted_values`), and Relationships (`relationships`). These tests check the integrity of your data and help ensure your data models are accurate and reliable.

The Unique test verifies the uniqueness of entries in a column, maintaining data integrity.

The Not Null test ensures that a column in a model doesn't contain any null values, preventing gaps in your data.

The Accepted Values test ensures that all values in a column fall within a specified set of acceptable values, maintaining consistency across data entries.

The Relationships test verifies referential integrity between tables: every value in a foreign key column must exist in the referenced column of the parent table.
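
As a minimal sketch, the four built-in tests are declared in a YAML properties file that sits alongside the models. The file path, the model names (`orders`, `customers`), the column names, and the status values below are hypothetical. Newer dbt versions (1.8 and later) prefer the key `data_tests:`, while `tests:` remains accepted as an alias.

```yaml
# models/schema.yml (hypothetical path and names)
version: 2

models:
  - name: orders
    columns:
      - name: order_id
        tests:
          - unique        # fails if any order_id appears more than once
          - not_null      # fails if any order_id is null
      - name: status
        tests:
          - accepted_values:
              values: ['placed', 'shipped', 'completed', 'returned']
      - name: customer_id
        tests:
          - relationships:            # every customer_id must exist in customers
              to: ref('customers')
              field: customer_id
```

Running `dbt test --select orders` would then execute these checks against the built `orders` model.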

In addition to the built-in schema tests, dbt allows you to craft bespoke data tests using custom SQL queries to test specific conditions. These tests provide flexibility and precision, enabling enterprises to address unique, business-specific data scenarios that may go beyond standard schema tests. With tools like PopSQL, writing and managing bespoke data tests becomes even more seamless, allowing data teams to efficiently craft and fine-tune these tests.

Unit Testing in dbt: Ensuring Every Piece Functions Perfectly

Unit testing in dbt is a crucial step in ensuring the quality and reliability of your data models. It involves writing SQL scripts to test individual components of your data models, verifying that they function as expected. These unit tests act as guards, preventing downstream data errors by validating each piece of the data pipeline.

Here are some key points about unit testing in dbt:

- Purpose: The purpose of unit testing in dbt is to validate the logic and functionality of individual data models. It helps catch errors and inconsistencies early in the development process, reducing the risk of data issues downstream.

- Mocking Dependencies: In dbt, you can test models independently by mocking their dependencies. This means creating mock inputs for the models and checking the results against the expected output. By isolating each model, you can ensure that it performs correctly regardless of the data it depends on.

- dbt Unit Testing Package: There is a dbt package called "dbt-unit-testing" that provides support for unit testing in dbt. This package contains macros that can be reused across dbt projects to facilitate unit testing.

- Tools and Frameworks: There are various tools and frameworks available to implement unit testing in dbt. For example, you can use PopSQL, a user-friendly SQL editor, to conveniently write and execute unit tests. It provides a collaborative environment for teams to work together on testing.

- Benefits: Unit testing in dbt offers several benefits. It helps ensure the accuracy and reliability of your data models, reduces the risk of data errors, and improves the overall quality of your data pipeline. It also promotes collaboration and knowledge sharing among team members.

To implement unit testing in dbt, you can follow these steps:

- Identify the components of your data models that need to be tested.

- Write SQL scripts to create mock inputs and define the expected output.

- Use the dbt unit testing package or other tools/frameworks to execute the tests.

- Analyze the test results and address any issues or discrepancies found.

- Repeat the process as you make changes or additions to your data models.

By incorporating unit testing into your dbt workflow, you can ensure that every piece of your data pipeline functions perfectly, leading to more reliable and accurate data analysis.
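
The mock-input and expected-output idea can be sketched with dbt's native unit tests, assuming dbt Core 1.8 or later (the `dbt-unit-testing` package mentioned above uses its own macro-based syntax instead). The model `order_totals`, its upstream `stg_order_items`, and all column names are hypothetical, and the sketch assumes the model sums `amount` per `order_id`.

```yaml
# models/unit_tests.yml (hypothetical; assumes dbt Core 1.8+)
unit_tests:
  - name: test_order_total_is_sum_of_items
    model: order_totals                  # hypothetical model under test
    given:
      - input: ref('stg_order_items')    # upstream model replaced by mock rows
        rows:
          - {order_id: 1, amount: 10}
          - {order_id: 1, amount: 5}
          - {order_id: 2, amount: 7}
    expect:
      rows:                              # output the model should produce
        - {order_id: 1, total_amount: 15}
        - {order_id: 2, total_amount: 7}
```

Such a test can be run on its own with `dbt test --select "test_type:unit"`, so the model's logic is checked against fixed inputs rather than live warehouse data.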

With PopSQL, teams can conveniently write and execute unit tests using its user-friendly SQL editor and collaborate effectively. Here's how PopSQL supports unit testing and collaboration:

- Collaborative SQL Editor: PopSQL provides a collaborative SQL editor that allows multiple team members to work together on writing and executing unit tests. This editor enables real-time collaboration, making it easy for team members to contribute and review each other's code.

- Query Sharing: PopSQL allows you to share query links, which means you can share a URL for your unit test query instead of copying and pasting SQL code over chat or email. This makes it convenient for team members to access and review the unit tests.

- Data Visualization: PopSQL offers data visualization capabilities, allowing you to visualize the results of your unit tests. This can be helpful in quickly identifying any issues or discrepancies in the data models being tested.

- Integration with Slack: PopSQL integrates with Slack, a popular team communication platform. This integration enables you to push key metrics and updates from your unit tests to Slack, keeping your team members up to date on the testing progress.

Overall, PopSQL provides a user-friendly and collaborative environment for teams to write and execute unit tests. Its features like real-time collaboration, query sharing, data visualization, and Slack integration enhance the efficiency and effectiveness of unit testing in dbt.

Test-Driven Development (TDD) in dbt: Quality Assurance from the Get-Go

Test-Driven Development (TDD) in dbt is a development approach that emphasizes quality assurance from the beginning of the development process. Here is a breakdown of this approach:

- Writing tests before implementing the code: With TDD, developers write tests for the desired behavior of the code before actually writing the code itself. These tests serve as a specification for how the code should function and what the expected outcomes should be.

- Ensuring transformations meet defined requirements: By writing tests upfront, TDD ensures that the data transformations implemented in dbt meet the defined requirements. This helps catch potential issues early on and ensures that the code produces the desired results.

- Promoting maintainability: TDD promotes maintainability by encouraging developers to write modular and testable code. By breaking down the code into smaller units and writing tests for each unit, it becomes easier to understand, modify, and extend the codebase in the future.

- Facilitating future code refactoring: With TDD, developers can refactor the code with confidence. Since there are tests in place that verify the expected behavior, any changes made during refactoring can be validated against these tests to ensure that the code still functions correctly.

Overall, TDD in dbt brings quality assurance to the forefront of the development process. By writing tests before implementing the code, developers can ensure that transformations meet defined requirements, promote maintainability, and facilitate future code refactoring. This approach helps improve the overall quality of the codebase and reduces the likelihood of introducing errors or regressions.

dbt Test Configurations and Management: Keeping Your Testing on Point

Managing dbt tests is a critical responsibility in an enterprise setting. It involves maintaining the test directory, tracking test results, and understanding the causes of test failures. Errors in data transformation can have significant consequences, making dbt test management an indispensable part of any data engineering strategy. Tools like PopSQL can serve as reliable allies, allowing data teams to write, run, and share SQL scripts for effective test management.

dbt Test Severity Levels and Prioritization: Making Every Test Count

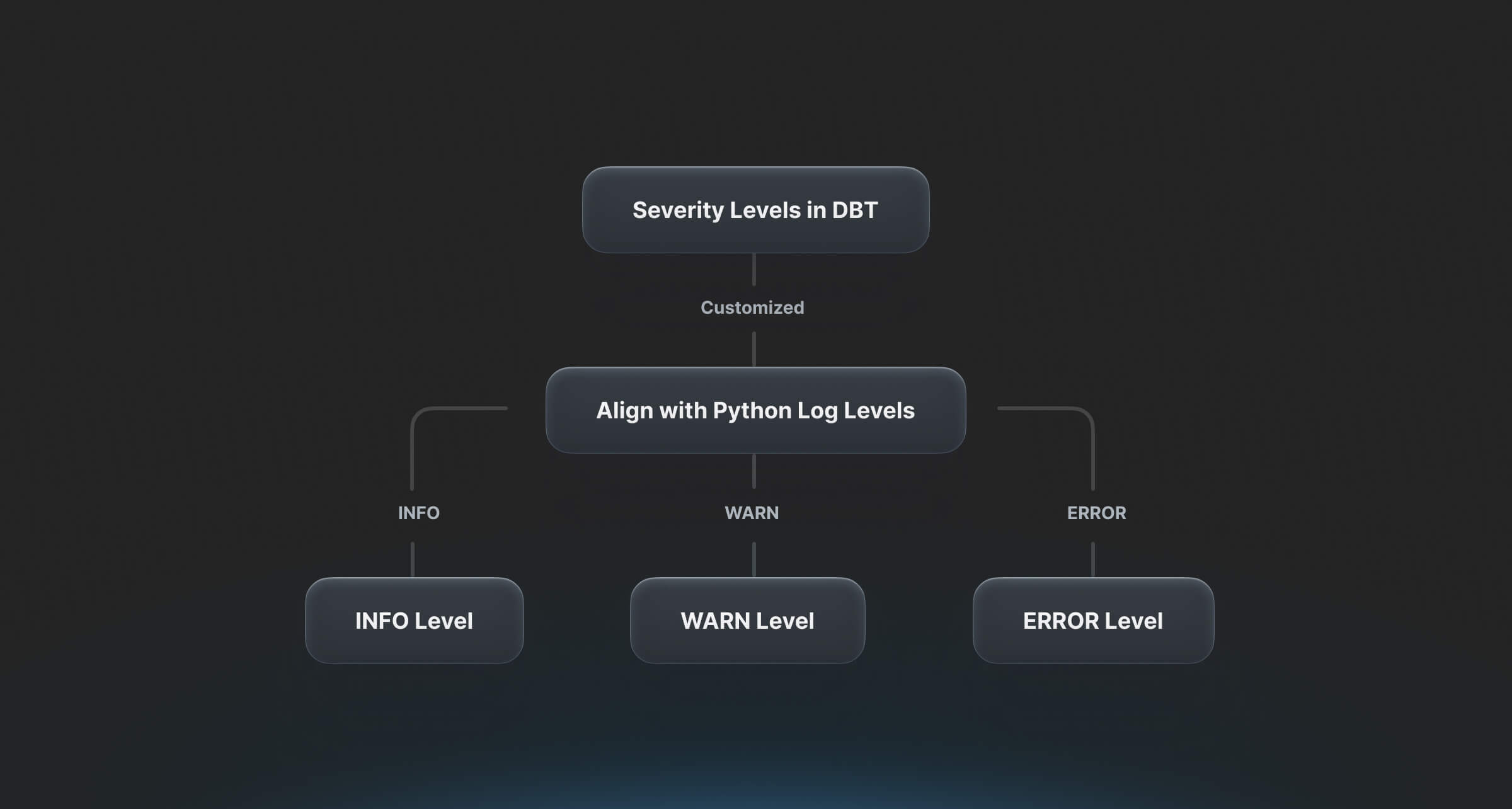

In the world of data testing, not all tests carry the same weight. Assigning severity levels to dbt tests helps identify which tests have a more significant impact on business operations. By prioritizing critical issues that require immediate attention and resolution, organizations can effectively manage their data quality.

dbt allows you to configure test severity. The severity setting determines the status of a test based on the number of failures it returns. Typically, a test returns the count of rows that fail the test query, such as duplicate records or null values. If the number of failures is non-zero, the test returns an error by default. However, you can configure tests to return warnings instead of errors, or make the test status conditional on the number of failures returned.
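
A minimal sketch of these options, using dbt's `severity`, `warn_if`, and `error_if` test configs. The model and column names are hypothetical, and the thresholds are arbitrary examples.

```yaml
# models/schema.yml (hypothetical path and names)
version: 2

models:
  - name: orders
    columns:
      - name: order_id
        tests:
          - unique:
              config:
                severity: error     # the default severity
                warn_if: ">10"      # warn when more than 10 rows fail
                error_if: ">100"    # error only when more than 100 rows fail
          - not_null:
              config:
                severity: warn      # any failure is reported as a warning
```

With this configuration, the `unique` test passes at 10 or fewer failing rows, warns between 11 and 100, and errors above 100, while a `not_null` failure never stops the run.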

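Out of the box, dbt's severity setting accepts two values: `warn` and `error` (the default). Teams that want a finer-grained scale, for example one aligned with typical Python log levels such as INFO, WARN, and ERROR, can layer their own convention on top for semantic clarity and easier understanding of how serious each test is.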

Automating severity levels is crucial to efficiently handle data issues. Manually going through all the steps to identify and address data issues can be time-consuming and prone to errors. By automating severity levels, organizations can streamline the process and ensure that critical issues are promptly addressed.

Implementing dbt tests is an effective way to proactively improve data quality.

However, scalability can be a challenge when dealing with a large number of tests. It is important to start with generic tests, leverage helpers and extensions, and model with testing in mind. Testing data sources, integrating alerting systems, establishing clear ownership of tests, and creating testing documentation are also important best practices.

In summary, assigning severity levels to dbt tests allows organizations to prioritize critical issues and effectively manage data quality. By automating severity levels and following best practices, organizations can ensure reliable and accurate data for their operations.

Monitoring Your dbt Tests: From Reactive to Proactive Data Quality Management

Data quality management should be a proactive and ongoing effort in an enterprise setting. dbt can store test results directly in the data warehouse, allowing for analysis and monitoring of test performance over time. This approach facilitates a shift from reactive to proactive data quality management, where patterns and trends are understood and data quality is improved before problems reach consumers.
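
One way to enable this, sketched here as a project-level fragment, is dbt's `store_failures` config, which writes the rows returned by each failing test to a table in the warehouse. The custom schema name is a hypothetical choice. Newer dbt versions prefer the top-level key `data_tests:` in place of `tests:`.

```yaml
# dbt_project.yml (fragment)
tests:
  +store_failures: true     # persist failing rows as tables in the warehouse
  +schema: test_failures    # hypothetical custom schema for those tables
```

The same behavior can be switched on for a single run with `dbt test --store-failures`. Note that each run replaces the stored failures for a given test, so tracking trends over time would require copying or snapshotting these tables on a schedule.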

With this comprehensive guide to dbt testing in SQL for your cloud database, you'll gain a deep understanding of best practices, real-world examples, and the business value that dbt testing brings to data engineering. By mastering dbt testing, you can ensure the integrity, accuracy, and reliability of your data, empowering your organization to make data-driven decisions and drive business growth.