How to Write dbt Macros using Jinja

Introduction

In the fast-paced world of data analytics, continuous learning and adapting to new technologies makes a huge difference in efficiency. For those who already recognize the value of dbt, diving deeper into its mechanics, such as dbt macros, can offer an edge. If you're a leader in data or a hands-on expert like a data engineer or analyst, learning about dbt macros and their interplay with Jinja can be extremely beneficial. With the added benefit of tools like PopSQL on top of dbt Core, this process has become even more streamlined. In this post, we'll explore the intricacies of dbt macros, understanding their core components, the magic of Jinja, and how PopSQL's dbt integration can transform your macro creation and testing.

What is a dbt macro?

A dbt macro, within the dbt framework, works like a function in traditional programming. It allows users to encapsulate specific, often repetitive, SQL logic into a named, callable unit. Just as you'd use a function in programming to avoid writing the same code repeatedly, a dbt macro lets you define a particular SQL operation once and then call upon that operation wherever you need it in your dbt project.

By leveraging macros, analysts and data engineers can maintain cleaner, more modular, and consistent codebases in their data transformation workflows. Think of it as a way to give SQL supercharged, function-like capabilities within the realm of dbt!

How do macros work?

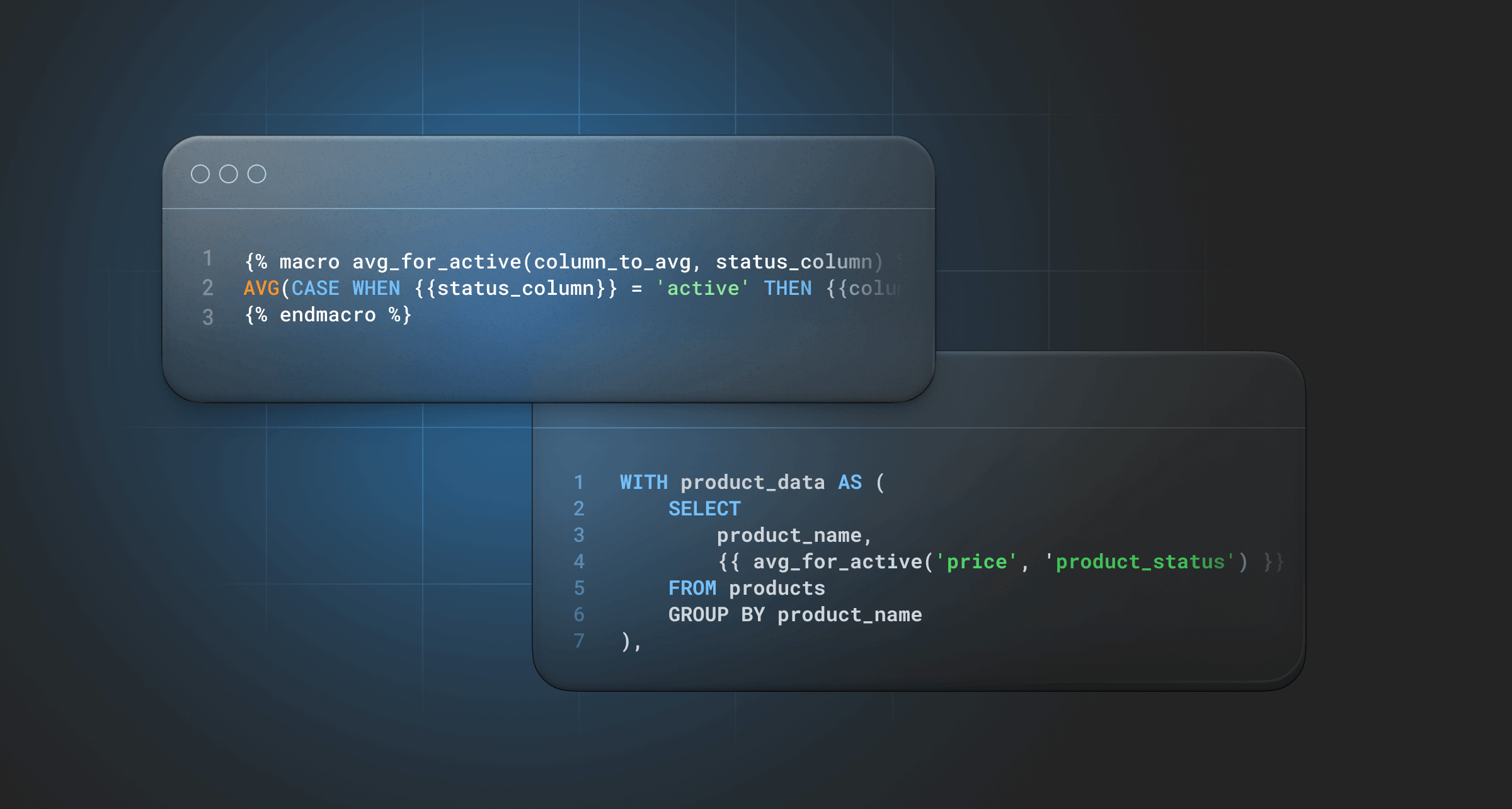

dbt macros use Jinja, a templating engine that is widely adopted in Python web frameworks. With Jinja, you can embed placeholders and logic directly into SQL code, making it dynamic and modular.

In a dbt macro, you can define a block of SQL with parameters. This block can then be reused throughout your dbt project with different arguments.

Example: a simple dbt macro

Let’s go through a super simple example. In this example we need to adjust all timestamps that are currently stored without a timezone, over to US Pacific time, since that’s the timezone the team has requested for all data analysis. We know those timestamps are currently in UTC time, so we can create a reusable macro to convert timestamps for us. First, create a new macro file under the macros folder in your dbt project with this code:

{% macro to_reporting_timezone(column_name) %}

convert_timezone('UTC', 'America/Los_Angeles',

{{column_name}}::timestamp_ntz)

{% endmacro %}When you use this macro in one of your dbt models and pass in a UTC timestamp column as an argument, Jinja replaces the placeholder {{ column_name }} with the argument provided. So, invoking {{ to_reporting_timezone('created_at') }} would produce:

convert_timezone('UTC', 'America/Los_Angeles',

created_at::timestamp_ntz)This new macro can be used across all your models. It’s super convenient when creating new models that also need to convert timestamps to the correct timezone. Plus, if you ever need to adjust the timezone used for reporting, you only need to change it in that one macro, rather than a bunch of individual model files.

By using dbt macros with the Jinja templating engine, SQL code becomes more reusable, maintainable, and modular. It allows data teams to avoid redundancy, enforces consistency, and streamlines the development process.

Jinja variables and placeholders in dbt macros

Variables

Syntax: % set... %

When using Jinja in dbt macros, you can use set to assign values to variables that can be referenced or reused in your macro. In the example below, a variable called partners is defined that contains a list of three partners that resell our products. We’ll come back to this variable when we review for loops later in this article.

{% set partners = ['costco', 'sams_club', 'target'] %}Placeholders

Syntax: {{...}}

Anything enclosed between double curly braces is evaluated and replaced with its value. In dbt, the most common use of this syntax is for the functions ref() and source(). In the example below, the users model is referenced.

select

organization_id,

min(created_at) as first_user_created_at

from {{ ref('users') }}

group by organization_id;The ref('users') is a dbt function that gets replaced with the actual reference to the view or table that is defined in the users model of your dbt project. When the above code gets compiled, it looks like this:

select

organization_id,

min(created_at) as first_user_created_at

from dbt.users

group by organization_id;dbt Jinja syntax in macros

Next, let’s review Jinja syntax in the context of creating dbt macros. Jinja provides the building blocks for macros, so a strong understanding of Jinja syntax is key to writing the best possible macros.

Comments

Syntax: #...#

Anything between {# and #} is a comment and will not be included in the final SQL. This is usually only necessary when you need to document something specific about your Jinja logic. You should prioritize documenting macro and input descriptions in dbt schema files rather than code comments.

{# this text will appear in your file but not in the compiled output #}If statements

Syntax: {% if... %} … {% endif %}

This syntax is used for simple conditionals (if statements) to add/remove lines of SQL. For example, in the dbt macro example below, the where statement only gets added to the query if the condition is met. In this case, the condition is that the model is being run incrementally. If the model is not being run incrementally, the where statement is left out of the query.

{% if is_incremental() %}

where last_update_at > (select max(last_update_at) from {{ this }})

{% endif %}It’s possible to build very complex logic using Jinja, but we recommend trying to keep things simple and readable. Keep in mind that most people who use dbt are data analysts who need to understand how the SQL queries work, and may not have time to learn all the complex logical operators in Jinja.

For loops

Syntax: {% for... %}

You can loop over lists or sequences using Jinja for loops. That can be super useful in dbt macros as a way to handle logic that can be difficult in SQL alone. For example, if you need to UNION multiple variations of the same table that is stored across a changing set of database schemas.

In the dbt macro example below, we are using a variable called partners to create a list of partners we work with. For each partner, we have a separate database schema, but the sales table always uses the same columns. We need to union the tables together to get a complete list of partner sales.

{% set partners = ['costco', 'sams_club', 'target'] %}

{% for partner in partners %}

select *,

'{{ partner }}' as partner

from {{ source(partner, 'sales') }}

{# this adds union all to all selects except for the last one #}

{% if not loop.last %} union all {% endif %}

{% endfor %}The resulting query will look like this:

select *, 'costco' as partner

from costco.sales

union all

select *, 'sams_club' as partner

from sams_club.sales

union all

select *, 'target' as partner

from target.sales This for loop can be placed directly into a model file, or we can create a macro that can be referenced in multiple models. It’s also possible to adjust the code above slightly to be reusable if you have multiple tables that need to be union’d together (sales, customers, inventory, etc.)

Best practices for creating dbt macros

Building a dbt macro involves a systematic approach to make your SQL code more reusable and modular. Here's a step-by-step process to build a dbt macro:

- Identify Repetitive Patterns:

Before diving into macro creation, review your SQL code to identify repetitive patterns or logic. Macros are ideal for situations where you find yourself writing the same or similar SQL across multiple models. - Choose a Meaningful Name and create the macro file:

Name your macro in a way that reflects its purpose. Your goal should be clarity and maintainability. Also try to avoid too many macros that do very similar things. If possible, create a more generic macro with multiple inputs that can be reused in more places.

In your dbt project, within themacros/directory, create a new.sqlfile for your macro. The name of the file should match the macro's name. - Define the Macro:

Within the file you created, use the{% macro %}and{% endmacro %}tags to define the macro. Within these tags, write your SQL logic, replacing variable parts with Jinja placeholders.

In the example below, the macro has two inputs:json_column_nameto reference the column name that contains a JSON object, andnested_column_nameto reference the nested column within that JSON object that needs to be extracted.

{% macro from_json(json_column_name, nested_column_name) %}

{# trim double quotes due to the way Snowflake returns the parsed JSON #}

trim(

parse_json({{json_column_name}}):"{{nested_column_name}}",

'"')

{% endmacro %}- Leave comments to the macro:

It's a good practice to add comments (using{# ... #}) within the macro file to describe the technical details of how it works, or any programming decisions you’ve made while creating the macro. This ensures that other team members (or even your future self) can understand its purpose and usage. - Document the macro in a schema file:

In addition to comments in the macro code, you should add descriptions for the macro and inputs in your schema file. Just like models and columns, macros use the same syntax for documentation. You can create amacros.ymlfile in themacrosfolder of your dbt project so you have a convenient place to document your macros. Here’s an example schema file that defines two of the example macros we explored earlier in the article:

macros:

- name: to_reporting_timezone

description: A macro to convert UTC timestamps without a saved timezone into US Pacific time

arguments:

- name: column_name

type: timestamp

description: The name of the column you want to convert

- name: from_json

description: A macro to extract a nested column value out of a JSON column.

arguments:

- name: json_column_name

type: variant

description: The name of the JSON column that contains the nested column.

- name: nested_column_name

type: string

description: The name of the nested column to be extracted from the JSON column. - Test the Macro:

After writing your macro, use it within a model to ensure it works as expected. Remember, since macros are essentially templates, you'll need to call them from a model or another macro to see the final SQL output.

Remember, the primary objective of dbt macros is to make SQL code more maintainable and reduce redundancy. As you become more experienced with dbt and Jinja, the process of creating macros will become more intuitive, allowing you to focus on complex data transformations and modeling.

Creating dbt macros in PopSQL

Software engineers typically navigate the development process with dexterity, having honed their skills in environments that demand intricate understanding of code, version control, and collaboration tools. However, as the data landscape evolves, many data analysts find themselves adopting tools like dbt, diving into a world traditionally reserved for developers. For these analysts, the steep learning curve can be daunting.

This is where PopSQL can help. It offers a more intuitive, user-friendly interface to create dbt macros, bridging the gap between the realms of software development and data analysis. Even for those well-versed in technical intricacies, PopSQL provides a faster, more agile environment. Quick changes, which might entail a series of steps in local development setups, can be executed with remarkable speed in PopSQL, streamlining the process and boosting productivity.

PopSQL’s dbt Core integration runs on PopSQL’s servers so you can even make changes through a browser. All you need to do is grant git access to your dbt project and choose a database connection to use for dbt commands and previewing model results.

You can see the integration in action by watching this 3-minute video:

Conclusion

dbt macros, fueled by the power of Jinja, have undoubtedly paved the way for more dynamic and efficient data operations. By understanding the nuances of Jinja's syntax, the dbt macro-building process, and the role of dbt variables, teams can harness the true potential of their data pipelines. However, as with all techniques, having the right tools can make the journey smoother. PopSQL's integration with dbt exemplifies this, making the creation and testing of dbt macros not just feasible, but simple.